Laying the Tracks of the Digital Economy: The AI & Cloud Infrastructure Boom

A historical parallel between the industrial railroad boom and the AI & cloud infrastructure expansion shaping the next digital century

The race to build the most advanced cloud computing and AI infrastructure is accelerating at an unprecedented pace.

In their most recent earnings calls, Amazon, Microsoft, and Alphabet (Google) revealed an extraordinary surge in capital expenditures (CapEx), meaning long-term investments in assets like data centers, servers, and chips, aimed at cloud infrastructure and artificial intelligence (AI). The big three cloud providers are plowing tens of billions of dollars into expanding their data center footprint, enhancing AI capabilities, and maintaining their competitive edge. This massive capital outlay evokes parallels to the industrial revolution of the 19th century. Just as railroad magnates poured vast sums into laying tracks across America and Europe, reshaping economies and industries, today’s technology giants are investing aggressively to build the rails of the digital economy: global cloud networks and AI infrastructure.

Much like railroads in the 19th century, cloud infrastructure and AI are becoming the backbone of modern economies, enabling innovation, increasing efficiency, and unlocking new business models. The parallel between the two is striking: both require heavy upfront CapEx, promise significant long-term returns, and have the opportunity to transform entire industries.

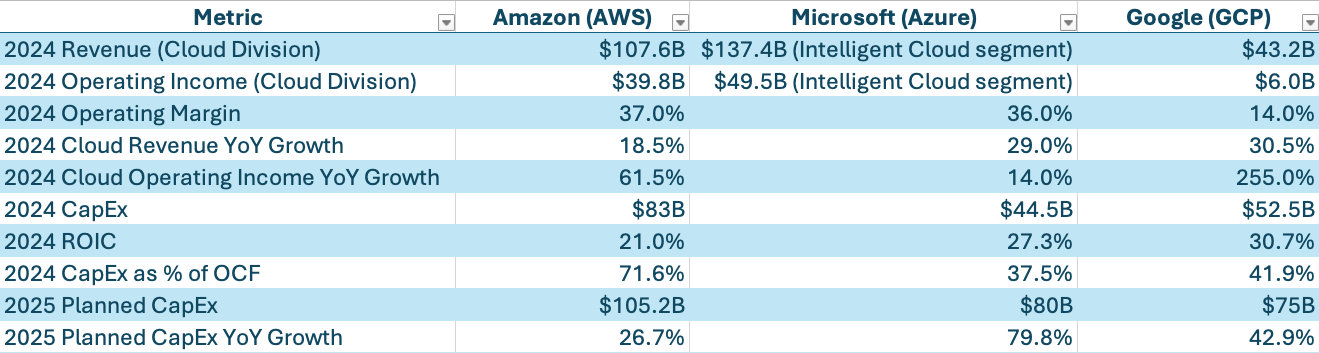

In this article, I examine the Q4 and year-end 2024 financial disclosures of Amazon (AWS), Microsoft (Azure), and Google (GCP), highlighting the guided cloud and AI portions of CapEx for 2025, leadership commentary on strategy, why these important investments are necessary, and what history teaches us about such capital-heavy industries.

What is Cloud Computing, and Why Does It Matter?

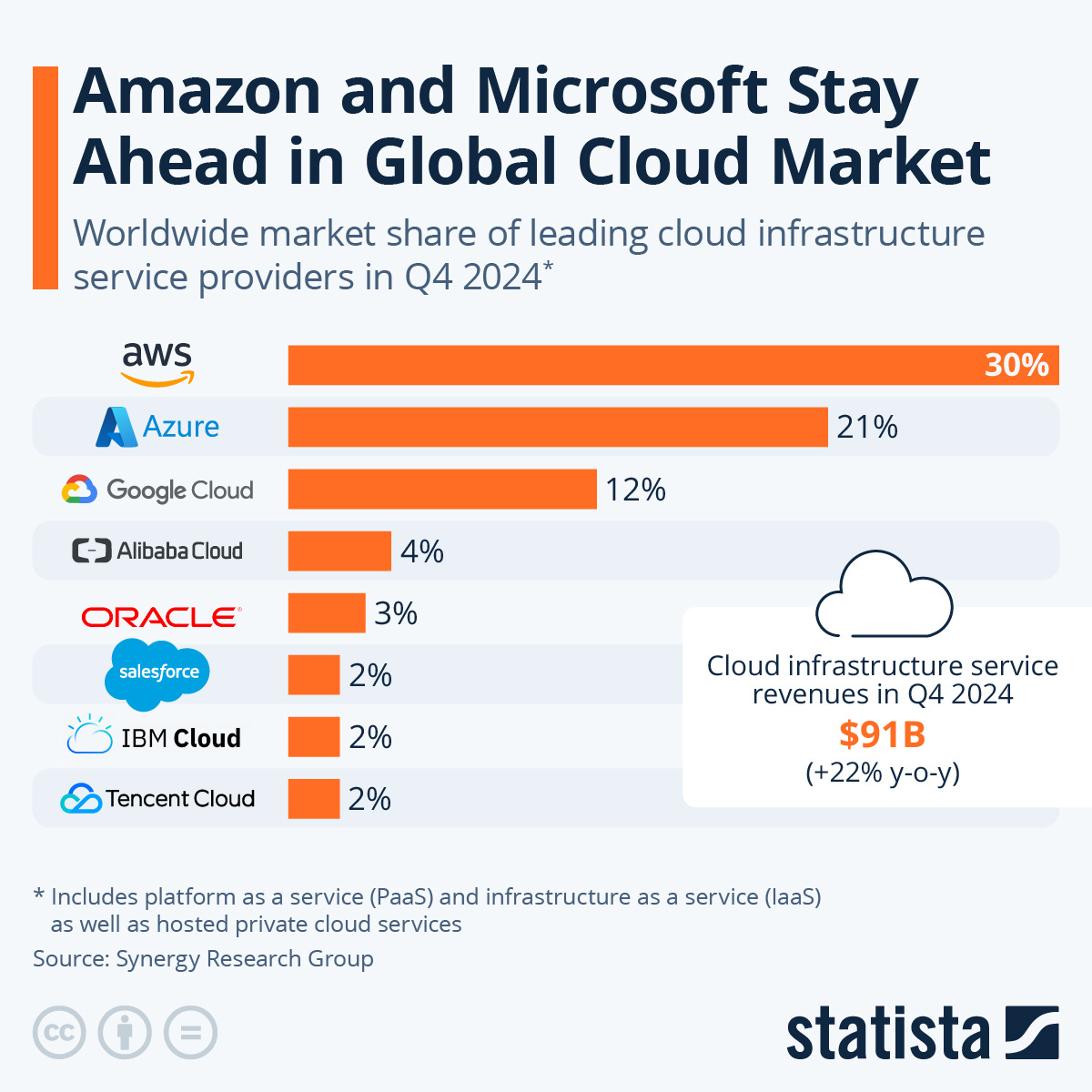

Cloud computing has become the digital backbone of modern business, and now, AI. At the center of this transformation are three dominant players: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

The public cloud allows companies to offload their IT infrastructure (servers, databases, networking, and now AI capabilities) to third-party providers. Instead of owning and managing this hardware, companies rent access to flexible, secure, and globally distributed computing power.

Put simply, the public cloud is like leasing supercomputers that scale with a company’s needs, without the cost or complexity of building its own infrastructure.

AWS, Azure, and GCP dominate the global cloud market. Amazon is the largest and most transparent in its financial reporting. Google also breaks out detailed cloud metrics. Microsoft, though second in scale, bundles Azure with other services, making direct comparisons harder, but its cloud strength is clear from financial and market data.

Each of the big three entered the cloud race from a different angle:

Amazon had the early insight. Jeff Bezos realized companies did not want to become IT shops just to run digital services. AWS launched in 2006 and built a massive lead by offering cloud infrastructure as a service.

Microsoft, although late to the game, has deep ties with high-profile companies. It rapidly scaled Azure by integrating cloud capabilities into existing software suites and enterprise contracts, gaining adoption through migration and bundling.

Google had the technical infrastructure in place, running Search, YouTube, and other services across distributed data centers, but did not initially think to offer it externally. Once it did, it focused on catching up, often by striking custom deals with AI startups. Today, GCP is growing rapidly and is a key contributor to Google’s profitability.

2025 CapEx Plans at a Glance

The year 2025 is set to shatter records for infrastructure investment by the top three cloud providers.

Each company is dramatically increasing CapEx, with much of it directed toward cloud data centers and AI, to maintain leadership amid fast-growing demand for AI services:

Amazon (Amazon Web Services): During the Q4 2024 earnings call, CEO Andy Jassy guided for $105.2 billion in CapEx in 2025, saying:

“[…] we spent $26.3 billion in CapEx in Q4. And I think that is reasonably representative of what you could expect in annualized CapEx rate in 2025. The vast majority of that CapEx spend is on AI for AWS.”

If 2025 CapEx does amount to $105.2 billion (four quarters at the Q4 rate of $26.3 billion), that would be a 26.7% increase over 2024’s $83 billion.

Microsoft (Azure): Microsoft’s fiscal year 2025 ends on June 30, 2025, so its most recent earnings call covered the fiscal second quarter (Q2 2025). During the call, CFO Amy Hood said:

“We expect quarterly spend in Q3 and Q4 to remain at similar levels as our Q2 spend [$22.6 billion including finance leases].”

This signals that Microsoft expects to spend around $45 billion more over the second half of its fiscal year. That amounts to an estimated $80 billion in CapEx for FY2025, which would be a 79.8% jump from FY2024’s $44.5 billion.

Google (Google Cloud Platform): During the Q4 2024 earnings call, CFO Anat Ashkenazi guided for $75 billion CapEx in 2025, saying:

“[…] invest to scale AI infrastructure and build AI applications […] So the $75 billion in CapEx I mentioned for this year, the majority of that is going to go towards our technical infrastructure, which includes servers and data centers.”

This represents a 42.9% YoY increase, to expand Google’s server and data center footprint for AI and cloud services. By comparison, Google’s CapEx was $52.5 billion in 2024, and just $32.3 billion in 2023. CEO Sundar Pichai commented during the call that this dramatic increase is to “meet that moment” in AI despite unit cost declines.
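The growth rates quoted above are easy to reproduce. Here is a minimal sketch using only the figures cited in this article (in billions of USD); the function names are mine, and the Amazon projection assumes the Q4 2024 run rate simply holds flat for four quarters, as the guidance suggests.

```python
# All figures are in billions of USD, as quoted in this article.

def annualize(quarterly_capex: float, quarters: int = 4) -> float:
    """Project a quarterly CapEx figure forward at a flat run rate."""
    return quarterly_capex * quarters


def yoy_growth_pct(current: float, prior: float) -> float:
    """Year-over-year growth, expressed in percent."""
    return (current / prior - 1.0) * 100.0


# Assumption: Amazon's Q4 2024 CapEx of $26.3B repeats in each 2025 quarter.
amazon_2025 = annualize(26.3)

# (expected next-year CapEx, prior-year CapEx)
capex = {
    "Amazon": (amazon_2025, 83.0),
    "Microsoft": (80.0, 44.5),   # FY2025 estimate vs. FY2024
    "Google": (75.0, 52.5),
}

growth = {
    name: round(yoy_growth_pct(current, prior), 1)
    for name, (current, prior) in capex.items()
}

for name, pct in growth.items():
    print(f"{name}: {pct}% YoY")
```

Running it gives 26.7% for Amazon, 79.8% for Microsoft, and 42.9% for Google, matching the percentages in the text.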

These staggering figures underscore an AI and cloud infrastructure arms race. The top three cloud providers are prioritizing long-term capacity over short-term earnings, much like railroad companies did when laying down tracks ahead of demand.

Below, I break down each company’s strategy and remarks from leadership about these growth investments.

Amazon Web Services (AWS): Doubling Down on AI Infrastructure

As the pioneer and largest cloud provider, Amazon’s cloud division, Amazon Web Services (AWS), has become a business generating $107.6 billion a year in revenue and converting 37% of it to operating income, and Amazon is reinvesting heavily to keep its lead.

In Q4 2024, Amazon’s CapEx hit $26.3 billion for the quarter, and CFO Brian Olsavsky indicated “that run rate will be reasonably representative” of 2025’s spend. In other words, Amazon expects to spend on the order of $105.2 billion on CapEx in 2025, and “the majority of the spend will be to support the growing need for technology infrastructure”, primarily AWS and its AI services.

CEO Andy Jassy has repeatedly emphasized that AI and cloud infrastructure are top investment priorities at Amazon, describing AI as a “once-in-a-lifetime” business opportunity during the Q4 2024 earnings call:

“[…] when AWS is expanding its CapEx, particularly in what we think is one of these once-in-a-lifetime type of business opportunities like AI represents, I think it’s actually quite a good sign, medium to long term, for the AWS business. And I actually think that spending this capital to pursue this opportunity, which from our perspective, we think virtually every application that we know of today is going to be reinvented with AI inside of it and with inference [AI runtime] being a core building block, just like compute and storage and database.

[…] AI represents, for sure, the biggest opportunity since cloud and probably the biggest technology shift and opportunity in business since the Internet.”

Jassy clarified during the earnings call that AWS’s infrastructure investments are guided by clear signals of customer need, not hopeful projections:

“It’s the way that AWS business works and the way the cash cycle works is that the faster we grow, the more CapEx we end up spending because we have to procure data center and hardware and chips and networking gear ahead of when we’re able to monetize it. We don’t procure it unless we see significant signals of demand.”

Jassy noted that AWS would have grown faster had it had the capacity to meet customers’ high demand:

“[…] it is true that we could be growing faster, if not for some of the constraints on capacity. And they come in the form of, I would say, chips from our third-party partners, come a little bit slower than before with a lot of midstream changes that take a little bit of time to get the hardware actually yielding the percentage healthy and high-quality servers we expect.”

Jassy’s comment underscores a key constraint facing hyperscalers amid the AI boom: chip supply and hardware readiness. While demand for AWS continues to surge, especially for AI and high-performance workloads, growth is being tempered by delays in receiving chips from third-party partners and the complexities of ensuring high yield and reliability in server production. This highlights the critical role of supply chain resilience and infrastructure agility in scaling AI services effectively.

Jassy also highlighted that falling unit costs in technology do not reduce total spend; instead, they stimulate more usage:

“[…] sometimes people make the assumption that if you’re able to decrease the cost of any type of technology component, in this case, we’re really talking about inference [AI runtime], that somehow it’s going to lead to less total spend in technology. And we have never seen that to be the case.

[…] People thought that people would spend a lot less money on infrastructure technology. And what happens is companies will spend a lot less per unit of infrastructure, and that is very, very useful for their businesses. But then they get excited about what else they could build that they always thought was cost-prohibitive before, and they usually end up spending a lot more in total on technology once you make the per unit cost less. And I think that is very much what’s going to happen here in AI, which is the cost of inference [AI runtime] will substantially come down.

[…] if you run a business like we do, where we want to make it as easy as possible for customers to be successful building customer experiences on top of our various infrastructure services, the cost of inference [AI runtime] coming down is going to be very positive for customers and for our business.”

Jassy drew a parallel to how lower costs in the early cloud era increased demand:

“We did the same thing in the cloud where we launched AWS in 2006, where we offered S3 object storage for $0.15 a gigabyte and compute for $0.10 an hour, which, of course, is much lower now many years later.”

In line with that philosophy, Amazon is doubling down on AI, saying that the vast majority of its ~$105 billion of expected 2025 CapEx will go into AI-oriented AWS cloud infrastructure. That is a 26.7% YoY increase in spending and the largest CapEx investment to date among the three major cloud providers. It includes funding for Amazon’s custom silicon (the new Trainium 2 and future generations of AI chips, meant to reduce costs and improve AI efficiency) and for scaling its AI cloud services (Bedrock, Nova models, SageMaker, etc.), including offerings introduced in 2024.

Such investment comes at a pivotal time for AWS. After a period of moderation in 2020-2022 and a trim to overall CapEx in 2023 on pandemic-era fulfillment overcapacity, AWS has been ramping up infrastructure spend again since Q1 2024 to address booming AI demand. Amazon revised the useful life of certain servers and networking equipment down from six years to five years, effective January 1, 2025. This adjustment is expected to decrease operating income by approximately $700 million for the full year 2025. Additionally, Amazon decided to retire a subset of its servers and networking equipment early, recording an expense of approximately $920 million from accelerated depreciation and related charges in Q4 2024; the early retirement is anticipated to further decrease operating income by approximately $600 million in 2025. By shortening the useful life of its servers, Amazon is acknowledging the need to refresh hardware faster for next-generation AI workloads.

While not stated explicitly, Amazon’s stance on returning capital to shareholders remains unchanged, and it stands in stark contrast with its cloud peers. This is implied through Jassy’s and Olsavsky’s repeated emphasis on long-term value creation, seizing generational technological shifts (AI, cloud, robotics), and allocating capital toward sustainable growth rather than distributing it via dividends or share buybacks, a capital allocation approach I firmly support. Jassy emphasized this stance during the earnings call:
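To see why a shorter useful life weighs on operating income, here is a minimal sketch that assumes simple straight-line depreciation on a brand-new asset. The $60 billion fleet cost is a hypothetical round number chosen for easy arithmetic, not an Amazon figure, and the sketch ignores depreciation already taken on existing equipment, so it will not reproduce the $700 million estimate above.

```python
def annual_straight_line(cost: float, useful_life_years: float) -> float:
    """Annual depreciation expense under straight-line depreciation."""
    return cost / useful_life_years


# HYPOTHETICAL asset base in billions of USD (not an Amazon disclosure).
fleet_cost = 60.0

expense_six_years = annual_straight_line(fleet_cost, 6)
expense_five_years = annual_straight_line(fleet_cost, 5)

extra_expense = expense_five_years - expense_six_years
increase_pct = round((expense_five_years / expense_six_years - 1.0) * 100.0, 1)

print(f"Annual expense at six years:  ${expense_six_years:.1f}B")
print(f"Annual expense at five years: ${expense_five_years:.1f}B")
print(f"Extra annual expense: ${extra_expense:.1f}B ({increase_pct}% higher)")
```

Whatever the asset base, moving from six years to five raises the annual straight-line expense by 20%, and that extra expense comes straight out of operating income.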

“I think that both our business, our customers, and shareholders will be happy medium to long term that we’re pursuing the capital opportunity and the business opportunity in AI.”

Amazon’s message is clear: AI is the new growth driver, and AWS is investing now to ensure it has the capacity and technological edge to capture that growth. As Jassy suggested, lower costs per AI computation will unlock more innovation and more usage, leading to more investment, a virtuous cycle benefiting AWS in the long run. This stance on investment and innovation has made Amazon the pioneer in cloud computing, with AWS leading in market share by a substantial margin.

Microsoft Azure: Scaling Globally to Meet AI Demand

Microsoft’s Intelligent Cloud division, which Azure is part of, has generated over $137 billion in revenue in FY2024, converting 36% of it to operating income.

Microsoft, with its deep enterprise relationships, is making similarly enormous investments into its Azure cloud to support surging AI services demand.

In the quarter ending December 31, 2024 (Q2 of fiscal 2025, since Microsoft’s fiscal year ends on June 30), CFO Amy Hood highlighted the surging demand for the company’s AI offerings, noting that AI services contributed 13 percentage points to Azure’s overall growth. AI services grew 157% YoY, despite Microsoft continuing to face capacity constraints. The remark underscores both the explosive appetite for enterprise AI and the urgent need to expand infrastructure to keep pace:

“Azure and other cloud services revenue grew 31%. Azure growth included 13 points from AI services, which grew 157% year over year and was ahead of expectations even as demand continued to be higher than our available capacity.”

Microsoft, like Amazon, is facing capacity constraints as AI demand continues to outstrip available supply. These parallel challenges at both AWS and Azure reflect a broader trend among hyperscalers: infrastructure build-outs are struggling to keep up with the rapid acceleration of AI workloads, driven by customer adoption and the expanding scale of generative AI applications. Hood reaffirmed Microsoft’s long-term commitment to cloud infrastructure, pointing to strong demand and a sizable backlog as key drivers of ongoing investment past FYE2025:

“In FY26, we expect to continue investing against strong demand signals including customer contracted backlog we need to deliver against across the entirety of our Microsoft Cloud.”

During the most recent quarter, Microsoft spent a record $22.6 billion in CapEx (including finance leases), largely driven by Azure and AI-related spending, with more than half of it going to long-lived assets that are planned to “support monetization over the next 15 years and beyond”. Hood noted this met the company’s expectations as it races to add data center capacity. In fact, Microsoft’s leadership notified analysts back in mid-2024 that a CapEx binge was coming to support AI, and they were not exaggerating. Microsoft has partnered closely with OpenAI, integrating AI into its cloud services and deploying thousands of Nvidia GPUs and custom chips to support enterprise AI workloads.

For fiscal year 2025, Microsoft plans to invest about $80 billion in AI-enabled data centers worldwide. Over half of that investment will occur in the United States, reflecting a strategy to ensure domestic capacity for OpenAI and Azure’s growing enterprise AI workloads. President Brad Smith noted in a January 2025 blog post:

“In FY 2025, Microsoft is on track to invest approximately $80 billion to build out AI-enabled datacenters to train AI models and deploy AI and cloud-based applications around the world. More than half of this total investment will be in the United States, reflecting our commitment to this country and our confidence in the American economy.”

This is an astonishing leap: Microsoft’s annual CapEx was under $30 billion in 2023, but the company’s AI momentum including Azure’s OpenAI services and the rollout of Microsoft 365 Copilot has necessitated massive capacity builds. Azure’s growth was ~31% YoY in the latest quarter, with AI services contributing 13 points of that growth. To avoid capacity constraints that could slow this growth, Microsoft is essentially building data centers as fast as it can.

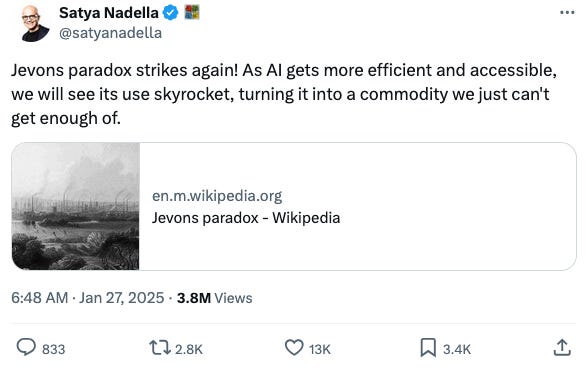

During the Q2 2025 call, CEO Satya Nadella articulated his insight into the economics of AI infrastructure. As advancements in both hardware and software rapidly drive down the cost of AI training and inference, the resulting efficiency gains do not dampen demand, they ignite it. Nadella emphasized that AI is following a compounding curve of improvement, where every generation of chips and models delivers exponential leaps in performance. This dynamic is not just a technical evolution, but a strategic force reshaping enterprise cloud demand:

“AI scaling laws are continuing to compound across both pre-training and inference-time compute. We ourselves have been seeing significant efficiency gains in both training and inference for years now. On inference, we have typically seen more than 2X price-performance gain for every hardware generation, and more than 10X for every model generation due to software optimizations.”

Nadella encapsulated Microsoft’s strategy by invoking an economic principle from the steam age:

“And, as AI becomes more efficient and accessible, we will see exponentially more demand.”

In other words, improvements such as better chips and optimized models that reduce the cost of AI will not reduce spending; they will trigger the Jevons Paradox, in which efficiency gains drive higher consumption.

In 1865, economist William Jevons observed that making steam engines more efficient did not reduce coal use, it increased it. Cheaper, more accessible power led to broader adoption and higher overall consumption.
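One way to make Jevons’s observation concrete is a toy demand model. The sketch below rests on an assumption that is mine, not Jevons’s or Nadella’s: demand follows a constant-elasticity curve, and the elasticity values are hypothetical. When demand is elastic (elasticity above 1), halving the unit cost raises total spend; when it is inelastic, total spend falls.

```python
def quantity_demanded(unit_cost: float, elasticity: float, scale: float = 1.0) -> float:
    """Constant-elasticity demand curve: q = scale * unit_cost ** (-elasticity)."""
    return scale * unit_cost ** (-elasticity)


def total_spend(unit_cost: float, elasticity: float, scale: float = 1.0) -> float:
    """Total spend is unit cost times the quantity demanded at that cost."""
    return unit_cost * quantity_demanded(unit_cost, elasticity, scale)


# HYPOTHETICAL elasticities, chosen only to illustrate the two regimes.
ELASTIC = 1.5     # usage responds strongly to lower cost
INELASTIC = 0.5   # usage responds weakly to lower cost

spend_before = total_spend(unit_cost=1.0, elasticity=ELASTIC)
spend_after_elastic = total_spend(unit_cost=0.5, elasticity=ELASTIC)
spend_after_inelastic = total_spend(unit_cost=0.5, elasticity=INELASTIC)

print(f"Spend before the cost cut:          {spend_before:.2f}")
print(f"Spend after, elastic demand:        {spend_after_elastic:.2f}")
print(f"Spend after, inelastic demand:      {spend_after_inelastic:.2f}")
```

With these toy numbers, halving the unit cost lifts total spend by about 41% in the elastic case and cuts it by about 29% in the inelastic case. The cloud CEOs are effectively betting that AI compute sits in the elastic regime.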

This counterintuitive effect, known as the Jevons Paradox, shows that efficiency often drives demand up, not down. DeepSeek’s efficient AI model raised concerns it could dampen demand for chips and cloud infrastructure, but history suggests the opposite: efficiency unlocks new use cases and accelerates demand. As costs continue to decline rapidly, AI adoption is set to grow exponentially and become embedded in companies’ daily operations and customer experiences, driving further demand for cloud computing. Microsoft is positioning Azure to capture that boom. Nadella added:

“[…] much as we have done with the commercial cloud, we are focused on continuously scaling our fleet globally and maintaining the right balance across training and inference [AI runtime], as well as geo distribution.”

This means Microsoft is balancing investments between giant AI training supercomputers for model development and widespread inference infrastructure to deploy AI services at scale.

Microsoft’s aggressive CapEx signals confidence that Azure’s AI leadership, bolstered by its partnership with OpenAI, will translate to long-term cloud dominance. Notably, Microsoft’s CapEx intensity in FY2024-25 has actually surpassed Google’s, a remarkable turnabout for a company once seen as more conservative in capital spending. This underscores that the AI race has reset the playing field: everyone must invest heavily, and Microsoft is clearly willing to spend big to secure its share of the AI cloud future.

Google Cloud Platform (GCP): Investing to “Meet that Moment” in AI

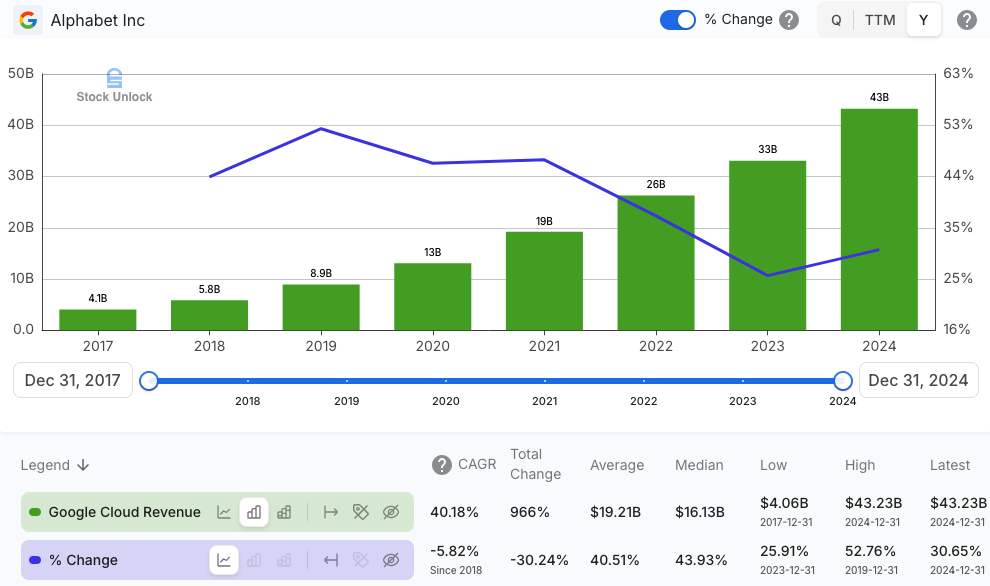

Google Cloud Platform (GCP), while smaller than AWS and Azure, is making aggressive moves to capture market share. It generated $43.2 billion in revenue in 2024, having reached profitability in Q1 2023, and converted 14% of 2024’s revenue to operating income.

Google is seeing some of the fastest CapEx YoY growth among the three. After a relatively modest CapEx of $32.3 billion in 2023, when Google was in efficiency mode, the company significantly ramped up CapEx spending to $52.5 billion in 2024, an increase of 63% YoY. For 2025, CFO Anat Ashkenazi has told investors to expect $75 billion in CapEx, a steep increase of 42.9%, underscoring Google’s commitment to AI infrastructure even at the expense of near-term profits. In a similar vein to Microsoft CEO Nadella’s reference to the Jevons Paradox, CEO Sundar Pichai defended the company’s elevated spending during the Q4 2024 earnings call, framing it as a long-term investment to meet a once-in-a-generation moment in AI:

“[…] the cost of actually using it [AI] is going to keep coming down, which will make more use cases feasible, and that’s the opportunity space. It’s as big as it comes, and that’s why you’re seeing us invest to meet that moment.”

Echoing the same cost-demand logic as his peers, Pichai believes cheaper AI will lead to explosive growth in AI applications, and Google wants to ensure its cloud and services can serve all those use cases. Google’s CapEx will largely fund servers and data centers: Ashkenazi noted that “the majority of that is going to go towards our technical infrastructure, which includes servers and data centers”, with a particular focus on infrastructure for AI research and cloud AI services. She attributed some of Google Cloud’s Q4 2024 YoY growth deceleration to capacity constraints, implying Google Cloud could have grown faster if more AI capacity were already available:

“We do see and have been seeing very strong demand for our AI products in the fourth quarter of 2024. And we exited the year with more demand than we had available capacity.

So we are in a tight supply-demand situation, working very hard to bring more capacity online. As I mentioned, we’ve increased investment in CapEx in 2024, continuing to increase in 2025. And we’ll bring more capacity throughout the year.”

This justification makes the 2025 spending spike sound necessary to capture unmet demand. A sizable portion of Google’s 2025 CapEx will flow into AI-specific hardware, everything from Tensor Processing Units (TPUs) for training its next-generation Gemini models to GPUs for customers’ workloads and expanded data center campuses to house them. Indeed, during Q4 2024 Google unveiled major AI advances, including Gemini 2.0 and new generative AI features in Search and Workspace, and ensuring the infrastructure can deliver these at global scale is critical. I wrote about this in more detail in a prior post.

These large CapEx investments aim to close the gap in the cloud ecosystem, where GCP sits in third position by market share, behind AWS and Azure. Google aims to make GCP a go-to platform for AI and is prioritizing long-term AI leadership over short-term margin expansion, a trade-off similar to what its two larger cloud peers are facing. Google clearly sees the AI land grab as underway and is determined to secure its territory by funding infrastructure at an unprecedented scale.

Five-Year CapEx Growth Trajectory (2020-2024)

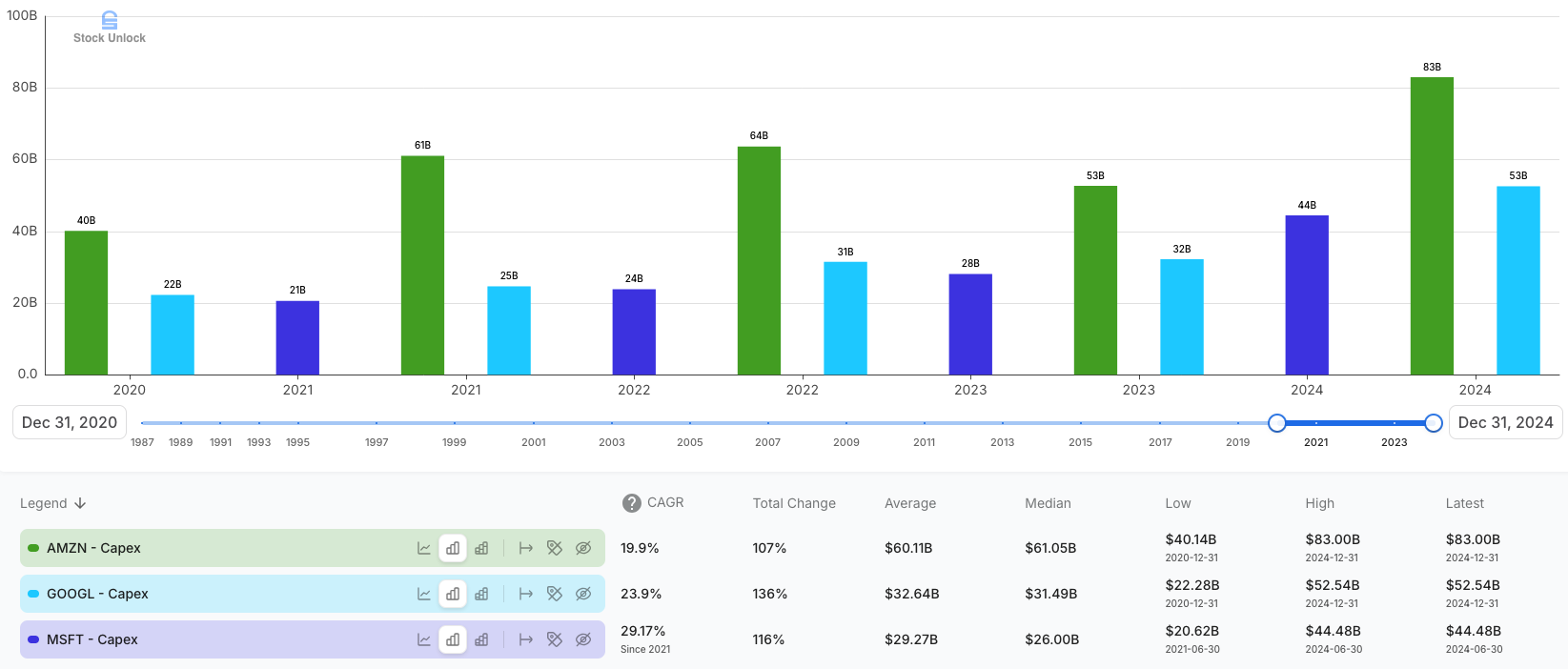

To put these recent moves in perspective, it is helpful to see how far CapEx has come in the last five years. The chart above compares Amazon, Microsoft, and Google on annual CapEx from 2020 through 2024. All three have seen tremendous CapEx growth largely related to their cloud and AI ambitions, particularly in the last two years as generative AI took off.

As shown above, Amazon (green bars) had an early CapEx spike in 2020-2021, partly driven by e-commerce fulfillment build-out in addition to AWS growth, then moderated in 2022-23, and is now soaring to new highs for AI & cloud in 2024-2025. Microsoft (dark blue bars) and Google (light blue bars) had steadier rises until 2022, after which both are accelerating.

Microsoft’s CapEx grew roughly 2.5x from 2020 to 2024 (from $17.6 billion to $44.5 billion) and is on track to reach $80 billion in FY2025. Google’s CapEx, relatively flat in 2020-2023 ($22-32 billion), jumped to $52.5 billion in 2024 and is planned at $75 billion for 2025, more than 3x its 2020 level. In absolute terms, Amazon remains the biggest spender, but all three are converging in commitment: by 2025, each is set to invest tens of billions of dollars annually in cloud & AI infrastructure alone. This collective investment is unprecedented in technology history; even the build-out of telecommunication fiber in the 1990s pales in comparison to the scale of cash being deployed now for AI compute capabilities.

Parallels to the 19th Century Railroad Boom

I can’t help but notice the resemblance between today’s cloud & AI build-out and the railroad expansion of the 1800s. During the railroad boom, companies like Union Pacific and Pennsylvania Railroad made colossal capital investments, often far ahead of immediate demand, to construct the rail lines that would eventually unify markets and drive economic growth. The strategic logic then is strikingly similar to now:

Building critical infrastructure ahead of demand: Railroad tycoons laid tracks across vast distances expecting that if you build it, usage will come. Today, cloud providers are erecting giant data centers and networking infrastructure in anticipation of an explosion of AI demand. Just as new rail lines unlocked new transportation use cases (moving goods and people faster and farther), new AI infrastructure is expected to unlock use cases from advanced cloud AI services to ubiquitous AI assistants in every industry. The big three CEOs explicitly note that lower costs spur more demand, reinforcing the need to have capacity ready, much like cheaper railroad fares led to more freight and passenger traffic in the 19th century.

Enormous upfront costs, long-term moats: Both railroads and cloud infrastructure share a characteristic: extremely high fixed costs to build, but relatively low marginal costs to add new customers once built. This means whoever invests early can create a competitive advantage. 19th century rail companies gained quasi-monopolies in regions they connected, and similarly, today’s cloud giants are securing dominance by building global networks of cloud-enabled AI platforms that smaller rivals cannot easily match. The scale of CapEx acts as a barrier to entry. In the railroad era, it was nearly impossible for new competitors to lay parallel tracks once the major routes were claimed. In the cloud era, the big three have a head start and are extending it. Like railroad barons, they are effectively carving up a new economic landscape, the cloud & AI market, amongst themselves, by virtue of out-spending everyone else.

Network effects and land grab: Railroads yielded network effects – each new route made the whole network more valuable, and owning the most extensive network was a strategic goal. Likewise, each new data center region and each AI platform service makes a cloud platform more attractive to customers, drawing in more usage and revenue to justify further expansion. We are witnessing a land grab for AI cloud dominance: Amazon, Microsoft, and Google are racing to establish infrastructure in key geographies and for key AI workloads such as training foundation models, hosting AI startups, enterprise AI integration, etc. This is reminiscent of how railroad companies raced to connect major cities or ports, knowing that controlling that route would yield economic supremacy. In cloud, controlling the infrastructure for global AI model training or hosting the next wave of AI applications could yield outsized influence and profit in the tech-driven economy.

Boom-bust risk: An important parallel, and a caution, is that the railroad era had its share of booms and busts. Not every rail line built proved profitable; there were periods of over-investment where capacity exceeded demand, leading to bankruptcies and to crises such as the Panic of 1873, which was partly triggered by railroad over-expansion. Similarly, some investors today have concerns about the return on investment (ROI) of AI CapEx if usage does not ramp as fast as expected or if new technologies make infrastructure cheaper. We saw a hint of this concern in early 2025: revelations that a Chinese AI startup, DeepSeek, trained a Large Language Model (LLM) at surprisingly low cost caused some investors to momentarily question whether the big three’s sky-high spending was efficient. The premise was that if AI were to become dramatically cheaper, or if demand fell short, these companies could face a scenario analogous to railroads running empty trains on overbuilt lines. However, the big three’s leadership teams firmly believe that advancements in AI inference could increase demand, not dampen it, and thus far they assert that demand is real and growing, with capacity constraints, not a surplus, being the limiting factor. In essence, they argue this is not a speculative bubble but a prudent investment to alleviate a capacity shortage in the face of transformative technology adoption, and I agree with their judgment.

In drawing the analogy, it is clear we are in an era where data centers are the new railroads, and AI models are the new locomotives. The rails being laid are made of fiber optics and silicon rather than steel, but the strategic imperative is similar: secure the routes for data and AI services that others will rely on to conduct their business. Just as the railroads revolutionized the economy, enabling cross-regional trade, the rise of new cities, and an acceleration of industry, AI and cloud infrastructure are revolutionizing everything from enterprise software to healthcare, finance, and transportation with intelligent services. Level-headed investors in the 1800s who backed the right railroad companies reaped huge rewards, and those who picked the wrong routes lost fortunes. The same may hold true now in the AI & cloud race.
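The “enormous upfront costs, long-term moats” point in the list above comes down to simple arithmetic: with a large fixed cost and a small marginal cost, the average cost per customer falls sharply as the customer base grows. Here is a minimal sketch; all numbers are hypothetical and chosen only to show the shape of the curve.

```python
def average_cost_per_customer(fixed_cost: float, marginal_cost: float, customers: int) -> float:
    """Average cost = fixed cost spread across all customers + marginal cost of one."""
    return fixed_cost / customers + marginal_cost


# HYPOTHETICAL numbers: a $1,000,000 build-out and $10 to serve each extra customer.
FIXED_COST = 1_000_000.0
MARGINAL_COST = 10.0

average_costs = {
    customers: average_cost_per_customer(FIXED_COST, MARGINAL_COST, customers)
    for customers in (1_000, 10_000, 100_000)
}

for customers, cost in average_costs.items():
    print(f"{customers:>7,} customers -> ${cost:,.2f} average cost each")
```

In this toy example the average cost drops from $1,010 to $110 to $20 as the base grows a hundredfold. An entrant with a small customer base faces the left end of that curve, which is why scale itself becomes the barrier to entry.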

Strategic Implications for Investors

For investors, the current cloud CapEx race presents both an opportunity and a dilemma. On one hand, these enormous investments underscore that Amazon, Microsoft, and Google expect robust growth in cloud and AI services for years to come. Leadership commentary from recent earnings calls reinforces a shared belief: generative AI and cloud computing are at an inflection point, and scaling up infrastructure now will pay off through dominant market share and revenue streams later. Just as owning key railroad lines yielded pricing power and durable revenue in the industrial age, owning global AI compute infrastructure and superior AI inference algorithms could yield sustained profits in the digital age. I believe in the AI growth thesis and I view these CapEx increases as a positive strategic move, essentially planting seeds for future cash harvests. Each of these companies has a track record of big bets that paid off: AWS for Amazon, Azure for Microsoft, and the massive data center network powering Search and YouTube for Google are just a handful of Think Big ideas turned into reality, with massive returns for shareholders. The AI era could magnify returns on capital for the winners through cloud subscription revenues, AI service fees, and even industry-wide influence, for example by attracting the best AI startups to build on their platforms, an approach similar to how Google is positioning its GCP offering.

CapEx Now, Free Cash Flow Later

On the other hand, the immediate impact is pressure on free cash flow (FCF), which has tempered stock performance since the latest financial disclosures. FCF is calculated as operating cash flow (OCF) minus capital expenditures (CapEx), so when CapEx spikes faster than OCF grows, FCF declines. As a result, market sentiment toward fundamentally sound, dominant businesses like the big three has temporarily faded, despite their long-term strength.
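To make the FCF arithmetic concrete, here is a minimal Python sketch. The figures are hypothetical round numbers in billions of dollars that I chose for illustration; they are not taken from any company's filings. It shows how FCF can fall even while operating cash flow grows, simply because CapEx grows faster.

```python
def free_cash_flow(operating_cash_flow: float, capex: float) -> float:
    """FCF = OCF - CapEx, with both inputs in the same currency unit."""
    return operating_cash_flow - capex


# Hypothetical figures in billions of dollars (illustrative, not company data).
ocf_before, capex_before = 100.0, 50.0  # year before the CapEx step-up
ocf_after, capex_after = 110.0, 80.0    # OCF grows 10%, CapEx grows 60%

fcf_before = free_cash_flow(ocf_before, capex_before)
fcf_after = free_cash_flow(ocf_after, capex_after)

print(f"FCF before: {fcf_before:.1f}B, FCF after: {fcf_after:.1f}B")
```

In this made-up case, operating cash flow rises by 10 billion, yet FCF drops from 50 billion to 30 billion because CapEx rose by 30 billion.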

The real question to me is not whether they are spending more; it is whether they will earn high, predictable returns on this incremental investment. Companies that can reinvest capital at returns well above their cost of capital should do so as aggressively as possible. Those that cannot end up destroying shareholder value. Amazon's reinvestment into AWS has historically delivered exceptional returns, turning CapEx into a durable cash-generating engine, and so have Microsoft's and, more recently, Google's cloud investments.
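The logic of reinvesting above the cost of capital can be sketched with the standard economic-profit spread: capital invested multiplied by the gap between the return earned and the cost of capital. The function name and every number below are hypothetical assumptions for illustration, not estimates for Amazon, Microsoft, or Google.

```python
def annual_value_created(invested: float, return_on_capital: float,
                         cost_of_capital: float) -> float:
    """Economic profit per year: invested capital times the return spread."""
    return invested * (return_on_capital - cost_of_capital)


# Hypothetical inputs: 100 (billions) of incremental CapEx, 8% cost of capital.
invested = 100.0
cost_of_capital = 0.08

# One project earns well above the cost of capital, the other below it.
high_return = annual_value_created(invested, 0.20, cost_of_capital)
low_return = annual_value_created(invested, 0.05, cost_of_capital)

print(f"20% return: {high_return:+.1f}B per year")
print(f" 5% return: {low_return:+.1f}B per year")
```

The same 100 billion of spending adds value in the first case and destroys it in the second, which is why the return on the incremental dollar matters more than the size of the budget.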

That principle is now being tested again as Amazon, Microsoft, and Google step up spending. Encouragingly, each company has signaled that its investment decisions are demand-driven and grounded in clear utilization signals. These are not speculative bets; they are capacity expansions backed by real-world usage data and enterprise commitments.

In the railroad analogy, this is like asking: will the trains be full of paying customers? Based on recent earnings calls and the data available, the answer is yes. Amazon has identified over 1,000 active or in-progress use cases for AI across its technology stack, including summaries of e-commerce customer reviews and an AI-powered Alexa experience. Microsoft reported record Azure commitments, driven by OpenAI and enterprise AI adoption, and said usage of its Copilot AI suite grew more than 60% QoQ. Google is deploying its AI technology, Gemini, across its seven core products and platforms with over two billion users, and is currently winning the infrastructure business of AI startups, with over 75% of them building on GCP.

As an investor, I focus on signals of utilization and return on investment: cloud revenue growth, AI workload uptake, stabilization of CapEx as a percentage of revenue, and ultimately a rebound in free cash flow once the build-out plateaus. With AWS, Azure, and GCP, there are signs that these CapEx-heavy investments will translate into higher long-term cash flows. Returns may take time to materialize, but the combination of high customer retention, massive scale advantages, and recurring revenue makes cloud infrastructure one of the rare business models where large CapEx outlays can generate exceptional long-term payoff. In my view, the intrinsic value of the big three has gone up, not down, following these earnings reports, and given my long-term outlook I am gladly buying more of these exceptional businesses (AMZN and GOOGL) into the sell-off as prices decline.

Competitive Dynamics in the AI Cloud Race

Another consideration is competitive dynamics. The big three are each spending at levels only they can afford, which suggests the cloud market could further consolidate. If one significantly out-invests the others in a way that captures outsized market share, we could see a scenario similar to certain railroad routes where one company dominated traffic. Currently, Amazon's planned ~$105 billion is outpacing Microsoft's $80 billion and Google's $75 billion for 2025, implying AWS intends to out-build its rivals and potentially leap ahead in AI capacity. If successful, AWS could offer better pricing or performance that draws more customers, reinforcing its lead. Conversely, Google's willingness to overshoot expectations in spending shows it is eager to close the gap with AWS and Azure, not cede the field. For investors, this means the cloud landscape is still competitive among the three, and there may not be room for a fourth competitor at this scale. Notably, other cloud providers like Oracle and IBM are far behind in CapEx, and even Meta Platforms (Facebook), which has been spending ~$30-35 billion on data centers in recent years, is focusing inwardly on its own AI needs rather than offering public cloud.
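For a rough sense of scale, the short Python sketch below computes each company's share of the combined 2025 CapEx using the three figures cited above. Two caveats are my own assumptions: Amazon's number is approximate, and I treat the three figures as directly comparable even though each is a company-wide budget rather than a pure cloud and AI line item.

```python
# Planned 2025 CapEx in billions of dollars, as cited in this article.
# Amazon's figure is approximate (~105).
capex_2025 = {"Amazon": 105, "Microsoft": 80, "Google": 75}

total = sum(capex_2025.values())

# Each company's share of the combined total, in percent.
shares = {name: round(100 * amount / total, 1)
          for name, amount in capex_2025.items()}

for name, share in shares.items():
    print(f"{name}: {capex_2025[name]}B ({share}% of {total}B combined)")
```

On these numbers, Amazon accounts for roughly two-fifths of the combined spend, with Microsoft and Google splitting the rest almost evenly.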

Control the Infrastructure, Shape the Economy

In practical terms, the takeaway is that these mega-cap companies are treating AI infrastructure as a long-term moat, even if it creates short-term earnings volatility. It is a classic case of investing through a technological revolution. Level-headed investors with a long horizon might favor companies willing to invest boldly, provided they have a track record of execution as the big three have, as it signals confidence and could yield durable growth. However, it is also wise to be vigilant about signs of diminishing returns. For example, if a company keeps upping CapEx but its cloud & AI revenue growth stalls or its profit margins consistently decline over the years, that could indicate overspending or competitive saturation. So far, each of the big three is still growing its cloud revenue at double digits (AWS +18.7% YoY, Azure +29% YoY, GCP +30.3% YoY) and improving efficiency by widening the profit margins in its cloud division, with impressive operating income growth, particularly for Amazon and Google (AWS +61.5% YoY, Azure +14% YoY, GCP +255% YoY from a small base). Some investors will expect these growth rates to accelerate further in the mid-term given the CapEx boost.

Finally, in the historical analogy, those who control the infrastructure often set the rules and reap the profits. Just as railroad tycoons eventually charged tolls and influenced industries, cloud platform owners may benefit from pricing power for AI services, infrastructure, and data storage, and from strategic leverage over the digital economy. There is already talk of AI becoming a commodity. For level-headed investors, owning stakes in these companies is analogous to owning pieces of the only railroads into a booming new territory. It does not guarantee smooth rides every quarter, but it does mean you are positioned in the value chain's foundational layer of the digital age.

Final Thoughts

The Q4 2024 (fiscal Q2 2025 for Microsoft) earnings calls made one thing crystal clear: the AI & cloud infrastructure race is no longer in the early innings; it is running at full throttle. Amazon, Microsoft, and Google are each committing between $75 and $105 billion in 2025 CapEx, numbers that would have been unthinkable just a few years ago. Despite the large upfront CapEx required to build data centers and global networks, the long-term economics are incredibly attractive: customers tend to stay locked in for years, if not decades, once they move to a cloud provider, and the margins improve with scale.

Their massive CapEx commitments underscore confidence in AI-driven growth, recognizing that reduced costs and increased efficiency historically stimulate even greater demand. The big three assert that the current capacity constraints justify the aggressive investment; they must build today to capture the immense, still-unfolding demand. These commitments are not short-term moves to juice quarterly numbers; they are foundational bets on long-term dominance in an AI-powered economy. Just as the railroad barons of the 19th century laid steel rails ahead of demand to shape trade, growth, and geography, today's cloud giants are laying the digital rails that will shape how intelligence flows through the economy.

History suggests that whoever builds and controls critical infrastructure, whether physical or digital, commands pricing power, shapes innovation, and reaps outsize returns. In this case, the tracks are fiber, the locomotives are AI inference models, and the economic cargo is every business and consumer application touched by intelligence.

As an investor, I see their strategic commitment as a positive long-term signal, despite the short-term pressure on free cash flow. Large-scale cloud infrastructure, customer retention, and recurring revenue models create compelling business economics, setting the stage for sustained returns. Amazon, Microsoft, and Google have already demonstrated the capability to reinvest aggressively at high incremental returns, positioning them to greatly benefit from this generational technological shift, similar to how oil pipelines, telephone wires, and railroads historically became the foundational utilities of modern capitalism.

While near-term volatility may concern some, I believe the intrinsic value of these cloud leaders is rising, not falling. Thus, I am enthusiastically investing into the recent sell-off, using price declines to expand my positions in these exceptional businesses. With a long-term outlook, owning stakes in the infrastructure underpinning the digital and AI-driven future could provide extraordinary rewards, just as early investors in foundational railroads profited from reshaping the industrial economy.

Whether all three will win equally, or whether one pulls ahead as in a winner-takes-all scenario, remains to be seen. One thing is certain to me: the companies that lay the most tracks today will define the AI economy of tomorrow. For level-headed investors, these are the businesses laying the foundation, not just for the next quarter's or next year's earnings, but for the next several decades.